Adobe Updates Neural Filters, Brings ‘Portrait Mode’ To Photoshop

The latest update for Adobe Photoshop includes a new Neural Filter called Depth Blur that lets photographers choose a focal point and intelligently blurs the background of a photo, effectively bringing the idea of a smartphone’s “Portrait Mode” to the desktop application.

Within Photoshop, it is already possible to create this effect manually by applying various blurs and masking subjects by hand, but that process is lengthy and tedious for most users. An automated tool like this, while not instant, is a faster “starting point” for the many situations where a background needs to be blurred.

The new feature works by creating a depth map of your image, which is then used to apply an adjustable, artificial depth of field. The effect can make images that were not shot wide open on a fast lens look as though they were. The tool arrives as part of the May 2021 update and can be accessed in the “Filter” menu under “Neural Filters.”

The Depth Blur filter is still labeled “Beta” and behaves accordingly: it still seems to struggle to identify objects in the frame and focal planes. That said, the messy blurs can easily be cleaned up with a quick mask.

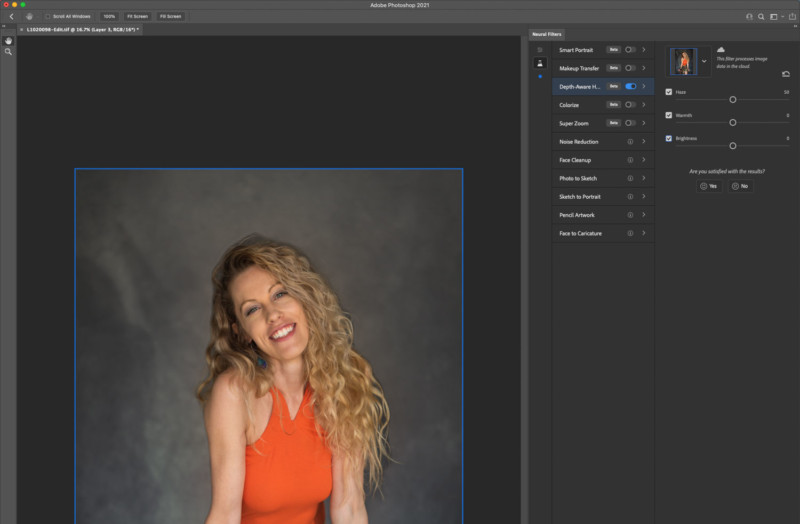

The filter has several sliders that control how strongly the effect is applied to the image: Blur Strength, Focal Range, and Focal Distance, as well as Haze, Warmth, and Brightness adjustments. While these sliders are being adjusted, a preview is displayed at the top of the filter panel. Clicking anywhere on that preview sets a manual focal point around which the depth of field adjustments are made.
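For readers curious how a depth map and sliders like these could combine, here is a rough Python sketch. It is not Adobe's implementation, which has not been published; the function names, the 0 to 1 depth scale, and the simple box blur are all assumptions made for illustration. The parameters borrow the names of the Blur Strength, Focal Range, and Focal Distance sliders, but how Photoshop actually interprets them is unknown.

```python
def blur_radius(depth, focal_distance, focal_range, blur_strength):
    """Hypothetical blur radius (in pixels) for one pixel.

    Assumes depth and focal_distance are normalized to the range 0..1.
    """
    # Pixels within half the focal range of the focal distance stay sharp.
    offset = abs(depth - focal_distance) - focal_range / 2
    # Beyond that band, blur grows linearly with distance from the focal plane.
    return max(0.0, offset) * blur_strength


def depth_blur(image, depth_map, focal_distance, focal_range, blur_strength):
    """Box-blur a grayscale image (list of rows) by a depth-dependent radius."""
    height, width = len(image), len(image[0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            r = round(blur_radius(depth_map[y][x], focal_distance,
                                  focal_range, blur_strength))
            # Average the neighbours in a (2r + 1) square, clipped at the edges.
            window = [
                image[ny][nx]
                for ny in range(max(0, y - r), min(height, y + r + 1))
                for nx in range(max(0, x - r), min(width, x + r + 1))
            ]
            row.append(sum(window) / len(window))
        result.append(row)
    return result


# Toy example: one bright pixel on a black background, all at the same depth.
image = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
far_depth = [[1.0] * 3 for _ in range(3)]
blurred = depth_blur(image, far_depth, focal_distance=0.0,
                     focal_range=0.0, blur_strength=1.0)
```

In this toy model, clicking a focal point on the preview would amount to reading the depth map at that spot and using the value as `focal_distance`. A real filter would also need a smoother blur kernel and careful edge handling, which is likely where the messy results around subjects come from.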

The new Depth Blur filter appears to be an updated version of the Depth-Aware Haze tool found in Photoshop versions prior to the May 2021 update, which contained just the Warmth, Haze, and Brightness sliders, as can be seen in the screenshot above. As this version is still in Beta, photographers can likely expect it to evolve at least somewhat before it is officially released.

It is worth noting that these Neural Filters rely on cloud-based computing, meaning it could take a while for adjustments to update and apply to the image on screen. As an added benefit, though, like most cloud-based plugins and machine learning tools, it is likely to get better with time as more people use it.

from PetaPixel https://ift.tt/2RBoJj2
